So, in this installment of Command Line Goodness, I am going to cover a few different utilities that I’m certain will prove useful in your investigations going forward.

Once again, I am going to use my own Firefox history as my example file. For those of you who don’t know, this file is located in C:\Documents and Settings\

Now, to make things easier on myself, I use the UnxUtils “ls” instead of the native Windows “dir”. It’s quick, has numerous command options, and I don’t have to flip-flop back and forth trying to remember if I am on a *nix or DOS command prompt (something you will come to appreciate the more you use command line utilities).

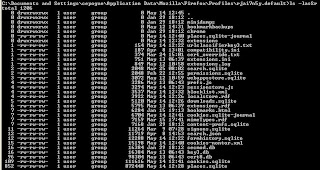

So, I use ls to list all of the contents of my current working directory (frequently referred to as the CWD). And I get something that looks like this…

OK…so what…that’s not very kewl Chris…well…like I said, there are plenty of command options that will make your life easier. In particular, I am going to use the options that give me a long listing (more information such as file permissions, ownership, and last access time), sorted by last access time, as well as by size.

OK…so the screenshot above is a bit kewler. It gives me some additional information, and it has sorted the files by last access time in reverse order (newest files on the bottom). I can also issue the same command without the “r” (for reverse) and the newest files will appear at the top.
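Since the exact flags live in the screenshot, here is a rough sketch of what that kind of listing could look like. I am assuming GNU-style “ls” options here, and the scratch directory and file names are made up for the demo. One thing worth knowing: “-t” on its own sorts by modification time, and adding “-u” switches the sort (and the displayed timestamp) to last access time. Also, lowercase “-r” is reverse; recursion is the capital “-R”.

```shell
# Scratch directory and file names below are hypothetical, for illustration only.
demo=$(mktemp -d)
touch -t 201003010900 "$demo/old.txt"
touch -t 201003150900 "$demo/mid.txt"
touch -t 201003300900 "$demo/new.txt"

# -l = long listing, -t = sort by modification time, newest first
ls -lt "$demo"

# Add -r to reverse the sort, so the newest file lands on the bottom
ls -ltr "$demo"

# Add -u to sort by (and display) last access time instead
ls -ltur "$demo"

# Grab the first and last names to confirm the ordering
top_of_lt=$(ls -t "$demo" | head -n 1)
bottom_of_ltr=$(ls -tr "$demo" | tail -n 1)
echo "newest first: $top_of_lt / newest last: $bottom_of_ltr"

rm -rf "$demo"
```

The sketch is written for a *nix style shell; at a Windows prompt with UnxUtils the “ls” lines should work the same way against your own directory.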

I can also sort by size, which is very helpful when trying to quickly locate either large or small files. In this case, my browser history is likely a pretty large file, so I am going to reverse sort by size, placing the largest file on the bottom.
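A minimal sketch of the size sort, again assuming GNU-style “ls” options (capital “-S” is the size sort). The three files are throwaway stand-ins I made up so the ordering is obvious.

```shell
# Hypothetical scratch files of three different sizes.
demo=$(mktemp -d)
printf 'x' > "$demo/small.dat"          # 1 byte
printf '%0100d' 0 > "$demo/medium.dat"  # 100 bytes
printf '%01000d' 0 > "$demo/large.dat"  # 1000 bytes

# -S sorts by size, largest first
ls -lS "$demo"

# Adding -r reverses the sort, so the largest file lands on the bottom
ls -lSr "$demo"

# The last name in the reversed listing is the biggest file
largest=$(ls -Sr "$demo" | tail -n 1)
echo "largest file: $largest"

rm -rf "$demo"
```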

OK…now I can see that my largest file is indeed my places.sqlite, which is my full browser history (and I didn’t have to eyeball file sizes…nice huh!).

Next, I want to make a copy of that file in my tools directory since that’s where all of my command line tools are located. This is NOT a necessary step if you have your command line tools either in system32, or you have added your tools directory to your default path.

So, to make the copy, I will issue this command…

> cp places.sqlite c:\tools

Now that I have a copy of my browser history in my tools directory, I am going to extract the printable characters with the “strings” command…like so…

> strings places.sqlite > web_history_strings.txt

***something to remember…you have this really kewl thing called “command line completion” or “tab completion”…which means that once you have typed enough characters to uniquely identify a name, you can simply hit the tab key and the rest will be completed for you. In this example, I typed “str” and hit tab, and “strings” was automagically filled in for me! I did the same thing with “pla” TAB…and blamo…the rest of the filename was filled in for me. Very kewl. Use this for long path names as well…like “Documents and Settings”, “Program Files”, or “Application Data”.***

Now, I JUST want to see any hits on the keyword “forensics”. To do that, I use the grep command, making sure to use the “-i” option, since I don’t want to limit my search by case. So my command would look like this…
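Here is a small sketch of that search. Against the file created above, the command would presumably be “grep -i forensics web_history_strings.txt”. To keep the example self-contained I am running it against a tiny made-up stand-in file, so the URLs below are placeholders and not my actual history.

```shell
# Hypothetical stand-in for web_history_strings.txt
demo=$(mktemp -d)
cat > "$demo/sample_strings.txt" <<'EOF'
http://www.example.com/Forensics/index.html
http://www.example.org/news
FORENSICS blog archive
http://www.example.net/recipes
EOF

# -i ignores case, so "Forensics" and "FORENSICS" both hit
grep -i forensics "$demo/sample_strings.txt"

# -c prints the number of matching lines instead of the lines themselves
hit_count=$(grep -ic forensics "$demo/sample_strings.txt")
echo "hits: $hit_count"

rm -rf "$demo"
```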

Now you all have a window into my secret habits of web surfing…oooohhh. This is a pretty simple example, but you can see where you have the ability to grep through large text files in a very short period of time based on specific search criteria. Just remember, if your search string has spaces in it, you need to put it in double quotes. To illustrate this, I am going to use a test timeline I created of my local system. If you don’t know how to do this, please refer to my blog series (Timeline Analysis).

In this example, I want to search for all activity that took place on March 15, 2010 (totally arbitrary date). My search would look like this…
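A sketch of what that search could look like. The timeline layout below (day, month, date, year, time, then the rest) is an assumption on my part based on the space-separated fields described in this post, and the entries themselves are invented. Your timeline may differ, so adjust the search string to match.

```shell
# Hypothetical timeline entries; the real file layout may differ.
demo=$(mktemp -d)
cat > "$demo/timeline.txt" <<'EOF'
Sun Mar 14 2010 22:10:05 1024 m... C:/temp/old.log
Mon Mar 15 2010 09:12:44 4096 m... C:/tools
Mon Mar 15 2010 09:13:02 51200 .a.. C:/tools/strings.exe
Tue Mar 16 2010 11:45:10 2048 ..c. C:/temp/notes.txt
EOF

# The quotes keep the search string together. Without them, grep would
# search for "Mar" and treat "15" and "2010" as file names.
grep "Mar 15 2010" "$demo/timeline.txt"

# For long output, page it one screen at a time:
#   grep "Mar 15 2010" timeline.txt | more

march15_hits=$(grep -c "Mar 15 2010" "$demo/timeline.txt")
echo "entries on March 15: $march15_hits"

rm -rf "$demo"
```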

I piped my output to “more” so that it would only show me one screen at a time…I can advance per line with the “Enter” key, and one page at a time with the “Space Bar”. As you can see, I could literally do the same thing with any date, and any time. Pretty slick and INSANELY useful when parsing timelines!

Now let’s say you JUST want to see the hits on the dates of March 15th…nothing more…just the dates. Since this output is pretty clean, it’s pretty easy to parse using gawk (Windows port of the *nix utility “awk”). If you look at the output on the screenshot above you will see that it’s separated by spaces. Count from left to right and assign each field a number. In this example, the “day” field would be number 1, the “month” field would be number 2, the “date” field would be number 3…and so on…you get the idea. So, now, if I JUST want to see the date field, I could use gawk to print field number 3…like so…
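Roughly, that looks like the sketch below. Two assumptions to flag: the timeline lines are invented samples in the day/month/date layout described above, and I am calling plain “awk” in a *nix style shell with single quotes around the program. At a Windows prompt with UnxUtils you would call “gawk” and wrap the program in double quotes instead, as in gawk "{print $3}".

```shell
# Hypothetical timeline entries; field 1 = day, 2 = month, 3 = date.
demo=$(mktemp -d)
cat > "$demo/timeline.txt" <<'EOF'
Mon Mar 15 2010 09:12:44 4096 m... C:/tools
Mon Mar 15 2010 09:13:02 51200 .a.. C:/tools/strings.exe
Tue Mar 16 2010 11:45:10 2048 ..c. C:/temp/notes.txt
EOF

# awk splits each line on whitespace; $3 is the third field (the date)
grep "Mar 15 2010" "$demo/timeline.txt" | awk '{print $3}'

# Several fields can be printed at once, separated by commas
grep "Mar 15 2010" "$demo/timeline.txt" | awk '{print $2, $3, $4}'

# Collapse the repeated dates down to the distinct values
dates=$(grep "Mar 15 2010" "$demo/timeline.txt" | awk '{print $3}' | sort -u)
echo "distinct dates: $dates"

rm -rf "$demo"
```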

Now you might be thinking…ok Chris, that’s neat and all, but when could I use that in a real investigation? Well, think of this…say you have a HUGE text file of credit card transactions and you know that the fraud was only committed against a certain type of card…let’s say GOLD cards. You know that the Bank Identification Number (BIN) is 1234 for the specific issuing bank you are working with and you know that the sub-bin for their GOLD cards is 567. You can pull out JUST the field with the card numbers, then grep out the GOLD cards based on what you know about the BIN and sub-bin…so…your command might look something like this…

>cat cc_file | gawk "{print $4}" | grep 1234567

This command would print ONLY field number 4 (which presumably would contain the CC numbers), then it would only display the numbers that contain the digits 1234567 in that specific order. Keep in mind that grep will match that sequence anywhere in the number, not just at the start. If you wanted to get a count, you could also add this to the end of the command…
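Because the BIN and sub-bin sit at the front of a card number, one possible tightening is to anchor the pattern with “^” so it only matches at the start of the field. The sketch below compares the two. The transaction layout and card numbers are completely made up, and I am using “awk” with single quotes in a *nix style shell (use “gawk” and double quotes at a Windows prompt).

```shell
# Hypothetical transaction file: date, time, merchant, card number.
demo=$(mktemp -d)
cat > "$demo/cc_file" <<'EOF'
2010-03-15 09:01:00 STORE01 1234567000000001
2010-03-15 09:02:00 STORE02 9991234567000002
2010-03-15 09:03:00 STORE03 1234567000000003
2010-03-15 09:04:00 STORE04 4444000011112222
EOF

# Unanchored: also hits the card that merely contains 1234567 in the middle
awk '{print $4}' "$demo/cc_file" | grep 1234567

# Anchored: only cards that START with the BIN and sub-bin
awk '{print $4}' "$demo/cc_file" | grep '^1234567'

loose_hits=$(awk '{print $4}' "$demo/cc_file" | grep -c 1234567)
anchored_hits=$(awk '{print $4}' "$demo/cc_file" | grep -c '^1234567')
echo "unanchored: $loose_hits, anchored: $anchored_hits"

rm -rf "$demo"
```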

>cat cc_file | gawk "{print $4}" | grep 1234567 | sort | uniq | wc -l

By piping your output to sort, you will put all of the numbers in order (for card numbers of the same length, that means lowest to highest). Then the “uniq” command removes the duplicates; it only collapses duplicates that sit next to each other, which is why the sort comes first. Finally, you are using “word count”…the command “wc” with the “-l” option…to count the number of lines. You could also provide a count for the number of times each card number appeared by adding the “-c” option to the uniq command (and leaving off the “wc -l”). This would still remove the duplicate entries, but it would also provide the number of times that each number occurred, just to the left of the entry.
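Here is a small worked sketch of both variations on a made-up transaction file. The layout and card numbers are invented for the demo, and I am using “awk” with single quotes in a *nix style shell (use “gawk” and double quotes at a Windows prompt).

```shell
# Hypothetical transaction file: date, time, merchant, card number.
demo=$(mktemp -d)
cat > "$demo/cc_file" <<'EOF'
2010-03-15 09:01:00 STORE01 1234567000000001
2010-03-15 09:02:00 STORE02 1234567000000002
2010-03-15 09:03:00 STORE03 1234567000000001
2010-03-15 09:04:00 STORE04 9999000011112222
2010-03-15 09:05:00 STORE05 1234567000000001
EOF

# Variation 1: how many times does each GOLD card appear?
per_card=$(awk '{print $4}' "$demo/cc_file" | grep 1234567 | sort | uniq -c)
echo "$per_card"

# Variation 2: how many distinct GOLD cards are there?
# (tr strips the padding some versions of wc put around the number)
total=$(awk '{print $4}' "$demo/cc_file" | grep 1234567 | sort | uniq | wc -l | tr -d ' ')
echo "distinct GOLD cards: $total"

rm -rf "$demo"
```

Running the two variations separately matters: if you tack “wc -l” onto the end of the “uniq -c” version, all you get back is the line count and the per-card counts are lost.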

So, with this one simple command (plus its “uniq -c” variation), you could parse your fatty text file by…

1. Printing JUST the field that contained the card numbers

2. Grep’ing out JUST the GOLD cards by BIN and sub-bin

3. Sorting the numbers

4. Removing the duplicate entries

5. Providing a count of the number of times each card appears (with “uniq -c”)

6. Providing the total number of unique GOLD cards in the file (with “wc -l”)

Not too bad for a SINGLE command. Good luck doing that same thing with a GUI utility sucka!

As you can see, command line utilities like this are very powerful and can provide you with a slew of information very quickly. The key is to know what you are looking for, then map out the commands you need to extract that data.

A good trick to getting this down in your head is to start with a small sample of your data…like 10 lines. Look at those lines and map out either on paper, or in your case notes what you are trying to do. No joke…it would be as simple as this…

“I want to extract all of the credit card numbers. Next, I ONLY want to see GOLD cards. Then I want to see how many GOLD card entries are present and provide a count of the number of times each card appears.”
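One way to grab that small sample is with “head”, then try the pipeline on the sample before turning it loose on the full file. This is just a sketch: the 25-line transaction file is generated on the spot with invented data, and I am using “awk” with single quotes in a *nix style shell (use “gawk” and double quotes at a Windows prompt).

```shell
# Build a hypothetical 25-line transaction file: date, time, merchant, card number.
demo=$(mktemp -d)
i=1
while [ "$i" -le 25 ]; do
  printf '2010-03-15 09:%02d:00 STORE%d 1234567%09d\n' "$i" "$i" "$i"
  i=$((i + 1))
done > "$demo/cc_file"

# Pull just the first 10 lines into a sample file
head -n 10 "$demo/cc_file" > "$demo/cc_sample"

# Eyeball the sample, then test the pipeline against it
cat "$demo/cc_sample"
awk '{print $4}' "$demo/cc_sample" | grep 1234567 | sort | uniq -c

sample_lines=$(wc -l < "$demo/cc_sample" | tr -d ' ')
echo "sample lines: $sample_lines"

rm -rf "$demo"
```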

Mapping your search criteria out like this can help you really zero in on exactly the data you are looking for…Sniper Forensics…it will also be helpful should you need to ask somebody like me for help. You could say…hey dood…here is my data, here is what I am looking to do, can you help me with the command structure? Then, as you begin to figure this stuff out, create a text file called “Commands” or “Useful Commands” or whatever…and keep track of which commands do what. That way you A) don’t have to ask the same question more than once (which really makes you look like a tool), and B) get more efficient at command line goodness…which again, will really take your investigations to an entirely new level.

Happy hunting!
